Refreshing our UX critique to create a more substantial design practice

Why did we need to change our Design Review?

When I joined AO.com’s UX Design team, our weekly critique sessions lacked clarity and purpose. If I’m honest with myself, they had done for some years, even while I was an individual contributor (IC) on the team. Instead of engaging deeply with design challenges, design reviews felt like general project updates, lacking accountability, structure, and tangible outcomes.

"Design reviews felt like status updates, missing the opportunity to genuinely challenge and refine our designs."

What Wasn't Working

Several clear gaps became apparent once we looked at our design review sessions with a critical eye:

Designers generally came to critique sessions unprepared, leading to inconsistent feedback and uneven demo quality.

Discussions favoured vocal team members, leaving quieter designers' perspectives unheard.

The lack of formal documentation or tracking limited accountability. It also made it hard to link the work shown with progress in the overall project. Our design review sessions felt disconnected from the project plan and from our stakeholders’ and customers’ opinions.

Because the sessions produced no data, they didn't adequately support managerial oversight or quality control.

Setting out my strategy for improving the critique experience

My strategy aimed to create a structured environment that supported different learning styles and fostered meaningful collaboration. Collaboration was key: I wanted the team to learn more from one another than they currently did.

Strategies:

Structure - Create a supportive environment that encourages thoughtful preparation.

Forms of Feedback - Enhance feedback quality by combining written and verbal forms.

Significant challenges - Shift from status updates to tackling each designer’s most significant design challenge that week.

Test & Learn with data - Track sessions to understand the team better and support oversight, without adding unnecessary complexity.

Finding the correct UX design review format for us

When structuring a 90-minute UX design review, I explored four approaches, each balancing participation, efficiency, and feedback depth. The goal was to ensure valuable discussions without rushing while ensuring most designers received feedback in each session.

Option 1: Round-Robin Feedback (Everyone Gets Reviewed)

Best for: Ensuring every designer gets input, fostering equal participation.

Downside: Feedback may be less in-depth due to time constraints.

Pros: Ensures all six designers receive feedback in each session. Keeps the session fair and inclusive. Encourages team-wide engagement.

Cons: Feedback can feel rushed (only ~8 min per designer). Not enough time for deep discussions or problem-solving. It might not provide actionable insights if the review is too quick.

My analysis: Trialling this would test efficiency vs. feedback depth, but it felt too fast-paced for meaningful discussion.

Option 2: Deep Dive (Fewer People, More Depth)

Best for: Improving feedback quality by allowing more discussion time per designer.

Downside: Not everyone gets reviewed in each session.

Pros: Provides in-depth feedback with 40 minutes per designer. Allows time for structured discussions and problem-solving. More opportunities for designers to ask questions and iterate.

Cons: Only two designers get reviewed per session, meaning others may wait weeks for feedback. Limits the number of projects being critiqued, potentially slowing team progress. It is harder to maintain engagement for those not presenting work.

My analysis: This would be an excellent format for high-stakes projects, but I needed something more balanced to keep momentum across the team.

Option 3: Small Groups (Breakout Critiques for Focused Input)

Best for: Balancing participation & feedback depth by allowing focused discussions in smaller groups.

Downside: Designers don’t get feedback from the whole team at once.

Pros: Creates focused discussions, allowing for better critique. More designers (3-4) can receive feedback in a session. Encourages peer-to-peer learning within smaller groups.

Cons: Designers don’t get feedback from the full team, just their small group. Risk of inconsistent feedback quality depending on group composition. Some team members may feel left out of key discussions.

My analysis: This had potential but felt too fragmented. I wanted discussions where the full team could contribute.

Option 4: Rotating Spotlights (1-2 Designers Get Full Team Attention Each Week)

Best for: Deep feedback while keeping reviews sustainable over time.

Downside: Designers may wait longer for their turn.

Pros: It allows for high-quality input with the entire team contributing. It prevents sessions from feeling rushed, and designers get valuable, actionable insights.

Cons: Only 1-2 designers are reviewed per session, meaning some might wait too long. The format may slow down the team's iteration cycles.

My analysis: This format ensures quality but doesn’t provide feedback fast enough for a team that needs quicker iteration.

The chosen approach

After considering all four formats, I decided to trial a structure where 3-4 designers present per session. This approach offers:

A balance between speed and depth: each designer gets 20-25 minutes, which keeps discussions meaningful but efficient.

Healthy, structured discussions that move on quickly and cover multiple projects.

Feedback for more designers each session, reducing bottlenecks and keeping momentum.

This structure avoids the rushed nature of Round-Robin while ensuring more designers get feedback than Deep Dive or Rotating Spotlights. It also keeps the whole team engaged, unlike Small Groups, where discussions are fragmented.

By trialling this approach, I can refine it further based on how the team responds—potentially adjusting the number of designers per session or introducing elements of other formats if needed.

The new Design review format in detail

Inspired by insight gathered from the team and by best practices (Sneak Peak Design, which provided insight into the methods used by top design teams, and Nancy Kline’s Time to Think method, which helped shape the structure and environment of our critique sessions), we drafted an agenda:

Each session begins with team updates and an 'around-the-room' moment where designers share wins from the past week. This helps set a positive tone before diving into design critique.

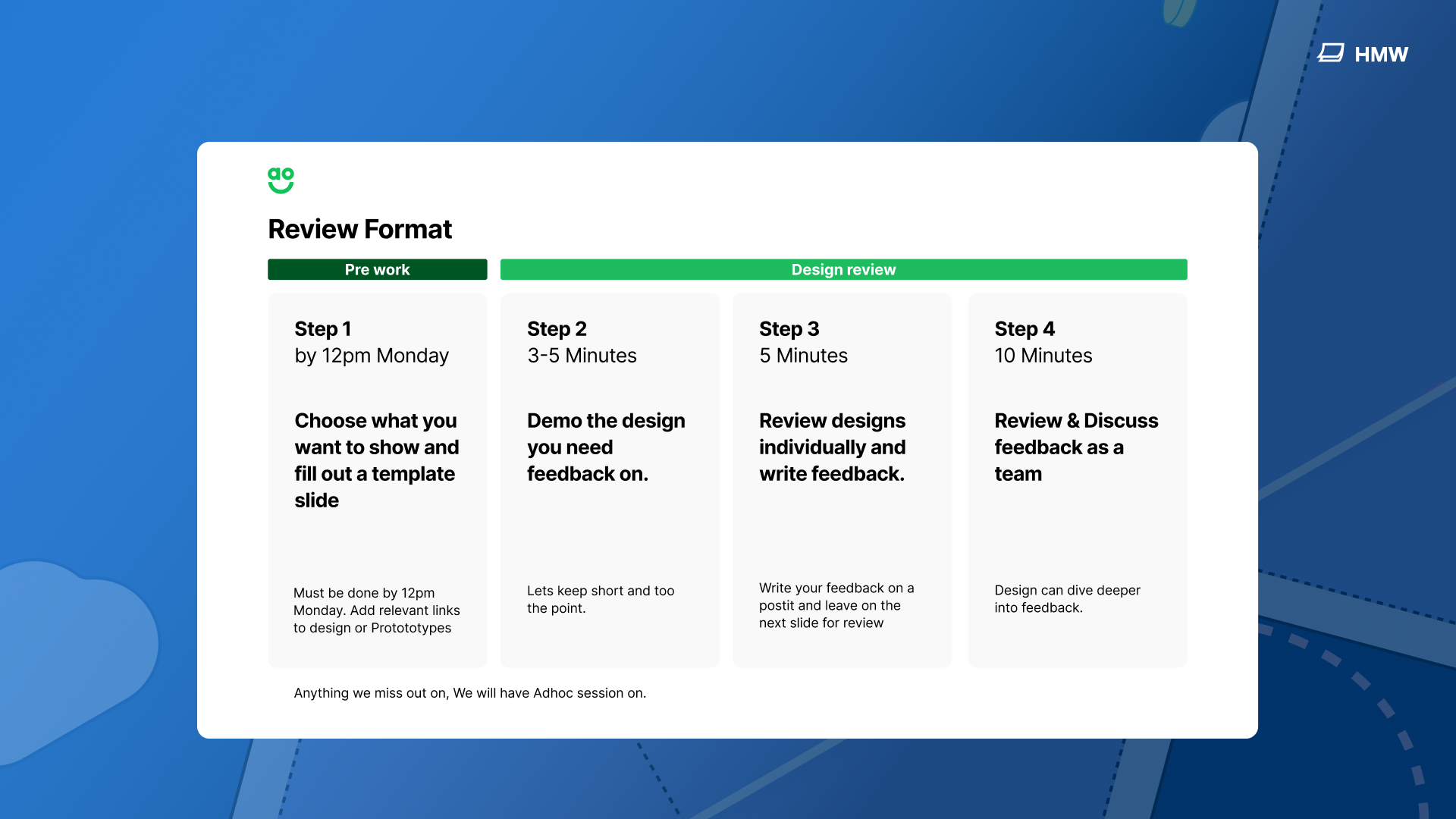

Below is the structured critique process introduced at AO.com, ensuring designers prepare in advance, present concisely, and receive both written and verbal feedback in a structured way.

Preparation: Designers submit brief, focused slide decks before the session.

Focused Presentation: Concise 2-3 minute overviews of the challenge.

Silent Feedback: Independent written feedback to encourage diverse perspectives.

Collaborative Discussion: Verbal sharing and exploration of feedback to enhance insights.

Choosing the Right Collaborative Environment

Because the team is distributed across the UK, I needed a remote-friendly tool to create a collaborative design critique environment. Miro and FigJam were ideal candidates, providing real-time interaction and shared digital spaces. However, neither was available to the team due to licensing constraints.

Other options included:

Microsoft Whiteboard, which was accessible but had a poor user experience, making feedback sessions more frustrating than productive.

Figma itself, which was collaborative but cluttered with design elements, making it harder to focus on critique rather than prototyping.

PowerPoint or other Microsoft tools, which lacked true real-time collaboration.

The best available option was Figma Slides—a structured, collaborative workspace that allowed us to present work in an easy-to-follow, focused format. While not a perfect solution, it was good enough to get started quickly, and we planned to trial FigJam as soon as it became available.

Outcomes for the design team

"The new structure helps me clearly see what needs improving and makes me feel confident presenting my ideas." – Designer

Below is an example of a design critique output, showcasing structured discussions, ideation sessions, and actionable design challenges explored by the team.

The structured approach immediately impacted the design team:

Attendance remained consistently high, averaging approximately 90% each session.

Around 50% of designers regularly demonstrated their work during sessions.

Designers consistently prepared slides for critique sessions, averaging two contributions per designer each month.

Post-critique "design jams" emerged, with designers proactively scheduling follow-up sessions, fostering deeper collaboration.

Outcomes for the business & our stakeholders

"Since the UX team refined their design critique process, we’ve noticed ideas come back sharper and more well-rounded. Decisions are easier, approvals are faster, and we’re seeing better alignment across teams."

One of the most significant shifts is how design critique feeds into broader business discussions. As a result of the improved process:

Designers enter stakeholder reviews more prepared and confident, leading to faster approvals and better alignment.

Stakeholders now recognise the value of critique, often requesting that designs be taken back for further iteration before finalising decisions.

Handover to developers has improved, with fewer last-minute design fixes and a more collaborative approach to execution.

Challenges

At launch, the design review process started extremely strong—engagement was high, and demand for a slot was at capacity. Designers consistently prepared content, contributed slides, and actively participated in discussions. The critique structure resonated with the team, and the process was seen as valuable.

However, as time progressed, a shift occurred. Attendance remained high, but fewer designers created content or requested a review slot. This was an unexpected challenge, and I needed to dig into the root cause.

To investigate, I turned to our Azure DevOps (ADO) board, which provided a clearer view of where designers were within their projects. The data revealed that three major strategic projects with development teams had entered the handover phase. At this stage, designers were no longer working on new concepts but supporting development by refining and troubleshooting build-related design issues. Naturally, this meant there was less work to bring to critique sessions.

Another key factor was the slowdown in small change requests. Due to the high demand on tech teams, smaller design iterations had paused, reducing the pipeline of design tasks available for critique.

A secondary challenge has emerged around facilitation ownership. Currently, I am the sole facilitator for design critique sessions. Of the 12 sessions so far, I have personally led 11, which isn’t a sustainable or scalable practice.

Looking ahead to the next quarter, I will prioritise transitioning this responsibility to a design lead or a team member passionate about critique facilitation.

Key Learnings & Adaptations

Tracking engagement was invaluable. The decision to log participation and track trends using an Excel document allowed me to analyse and act on engagement shifts rather than relying on assumptions.

Reframing design review participation. I reassured the team that the decline in critique slots wasn’t a failure but a reflection of project cycles. Even if fewer people presented, the sessions remained valuable.

Adjusting session length. Instead of forcing full-length critiques, I shortened sessions based on participation levels, which was well received by the team.

Proactively sourcing new work. Recognising the lull, I worked with stakeholders across the business to identify new projects earlier, ensuring a steady pipeline of design work flowing into critique.

Building facilitation redundancy. Moving forward, I will identify and mentor new team facilitators to create a more resilient and scalable critique process.

Since then, we’ve seen an uptick in critique content and an increase in new design challenges as new work has entered the team. Design critique has become a litmus test for overall team workload and workflow health, providing an early indicator of whether projects are progressing smoothly or if intervention is needed.

We faced minimal resistance. The key ongoing challenge was maintaining enthusiasm during quieter periods. To maintain engagement, sessions remain strictly design-focused and end early if there’s limited content, preventing them from reverting to project updates.

Building a collaborative culture

"Having structured feedback sessions means I now regularly block out time to prepare, leading to better critiques and better designs." – Designer

The changes fostered a more cooperative and supportive team environment:

Spontaneous collaborative sessions (design jams) became regular, encouraging cross-project collaboration.

Manager feedback became structured, actionable, and documented weekly to guide continuous improvement.

Confidence increased among all team members, enhancing their ability to give and receive constructive feedback.

Lessons I learned as a Leader

Smaller, regular improvements could be more effective and sustainable than a major overhaul like this one. It worked out in this case, and the risk was low because it would have been easy to revert if we had wanted to.

Recognising different communication styles is crucial for creating an inclusive environment for feedback. We must vary how we gather feedback, as one size won’t fit all. The same is true of the design crit.

Structured critique sessions significantly boost the team's collective design craft. I’m seeking more opportunities to standardise and define other key UX Team activities.

The advice I’d give to other design leaders:

Structured design critique is foundational for improving team maturity and design excellence. If your critiques are ineffective, start small, iterate regularly, and actively seek feedback. Practical critique sessions foster better design outcomes and a stronger, more collaborative team culture.

Track Your Changes with Data: One of the most valuable things I did during this process was creating a simple dataset to track engagement. Using an Excel document, I logged participation, content creation, and attendance using a mix of structured yes/no questions and free-text observations.

Time Investment vs. Benefit: The tracking process took less than two minutes per session, but the insights gained were invaluable.

Identifying Trends & Taking Action: The dataset helped me quickly pinpoint shifts in participation, allowing me to make data-driven adjustments—such as shortening sessions, refocusing critique discussions, and identifying where new work was needed.

Enhancing Analysis with AI: Combining the dataset with tools like ChatGPT allowed for deeper analysis, helping me spot trends I may have missed and refine my approach further.

For any design leader implementing a new process, I highly recommend tracking engagement in a lightweight way. It doesn’t need to be complex, just structured enough to provide insights that help you adapt and continuously improve.
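The tracking described above was done in Excel. Purely as an illustration of how little structure is needed, here is a minimal Python sketch of the same idea. Everything in it is an assumption made for the example: the field names, the three-session comparison window, and the sample rows are hypothetical and are not the real AO.com data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SessionLog:
    """One row per critique session (all field names are hypothetical)."""
    week: int
    team_size: int
    attended: int
    presented: int
    ended_early: bool  # an example of a structured yes/no question
    notes: str = ""    # free-text observation


def attendance_rate(log: SessionLog) -> float:
    """Share of the team that attended the session."""
    return log.attended / log.team_size


def presenter_rate(log: SessionLog) -> float:
    """Share of the team that brought work to the session."""
    return log.presented / log.team_size


def presenting_is_declining(logs: list[SessionLog], window: int = 3) -> bool:
    """True when the latest `window` sessions average fewer presenters
    than the `window` sessions before them. Returns False if there is
    not yet enough history to compare."""
    if len(logs) < 2 * window:
        return False
    ordered = sorted(logs, key=lambda log: log.week)
    previous = mean(presenter_rate(log) for log in ordered[-2 * window:-window])
    recent = mean(presenter_rate(log) for log in ordered[-window:])
    return recent < previous


# Illustrative rows only, invented for this sketch.
logs = [
    SessionLog(1, 6, 6, 4, False),
    SessionLog(2, 6, 5, 4, False),
    SessionLog(3, 6, 6, 3, False),
    SessionLog(4, 6, 5, 2, True, "Several projects in handover"),
    SessionLog(5, 6, 6, 1, True),
    SessionLog(6, 6, 5, 2, True),
]
print(presenting_is_declining(logs))
```

A check like this only flags that something has changed. Working out why, for example by looking at where projects sit on the board, still needs a person.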

Thanks for reading